Burlingame, CA – July 16, 2024 – Quadric® today introduced the Chimera™ QC Series family of general-purpose neural processors (GPNPUs), a semiconductor intellectual property (IP) offering that blends the machine learning (ML) performance characteristics of a neural processing accelerator with the full C++ programmability of a modern digital signal processor (DSP). The third-generation implementation of the Chimera architecture, the QC family includes both single-core and multicore cluster offerings as well as safety-enhanced versions of both.

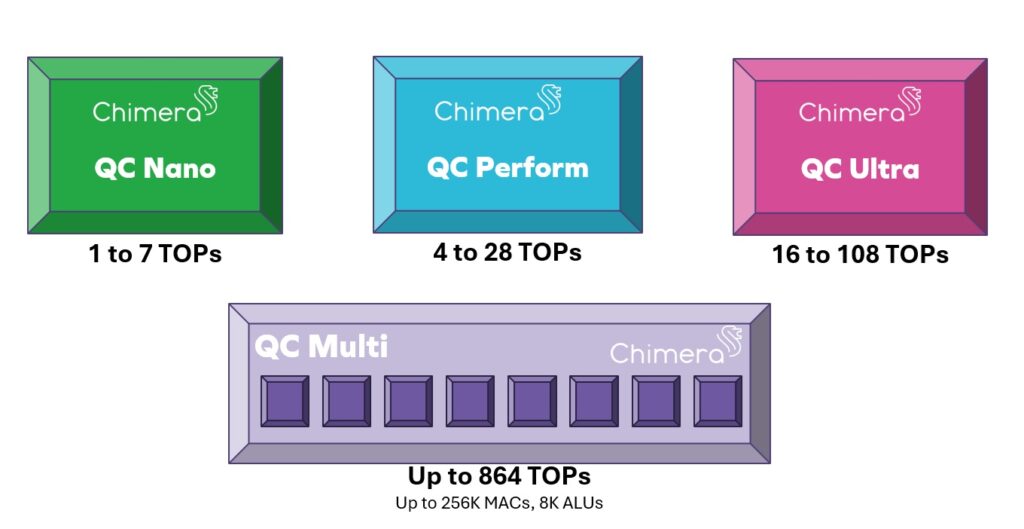

Building upon the successful Chimera QB series GPNPUs introduced in late 2022, the evolutionary QC series adds more configurability, letting designers tailor the mix of performance characteristics to the ML inference workload anticipated for a particular system-on-chip (SoC) design. The QC series includes three configurable single-core processor options: the Chimera QC Nano processor, delivering up to 7 TOPS of ML horsepower; the Chimera QC Perform processor, packing up to 28 TOPS; and the Chimera QC Ultra processor, which delivers up to 108 TOPS.

For systems demanding even higher performance, the new QC-M family of multicore GPNPUs offers pre-integrated clusters of two, four or eight QC Nano, QC Perform or QC Ultra building-block cores. The QC-M family thus scales from running small workloads in parallel on Nano cores all the way up to high-compute applications on eight QC Ultra cores. That top configuration provides Level 4 central ADAS applications with 864 TOPS (8 × 108 TOPS) for processing multiple large-format camera input streams in parallel. QC-M clusters include inter-core communication circuitry as well as streaming weight-sharing functions that broadcast common machine learning model weights to two or more cores in a cluster.
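The cluster figures in the paragraph above follow from simple multiplication, and the benefit of weight broadcasting can be estimated the same way. The Python below is a back-of-envelope sketch, not Quadric tooling: it assumes perfectly linear scaling across cores, and it uses a simplified traffic model in which, without sharing, every core fetches its own copy of a common model's weights, while with broadcast the weights are fetched once. The function names and the 50 MB model size are hypothetical.

```python
"""Back-of-envelope QC-M cluster scaling (illustrative, not Quadric tooling)."""

# Per-core peak INT8 ratings quoted in the release, in TOPS.
CORE_PEAK_TOPS = {"QC Nano": 7, "QC Perform": 28, "QC Ultra": 108}

# Cluster sizes offered in the QC-M family.
CLUSTER_SIZES = (2, 4, 8)


def cluster_peak_tops(core: str, cores: int) -> int:
    """Ideal cluster peak: per-core peak times core count.

    Assumes perfectly linear scaling, an upper bound real workloads rarely hit.
    """
    if cores not in CLUSTER_SIZES:
        raise ValueError(f"QC-M clusters have 2, 4 or 8 cores, not {cores}")
    return CORE_PEAK_TOPS[core] * cores


def weight_fetch_bytes(model_bytes: int, cores: int, broadcast: bool) -> int:
    """External weight traffic when all cores run the same model.

    Simplified model: without sharing, each core fetches its own copy;
    with broadcast, the weights are fetched once and fanned out to all cores.
    """
    return model_bytes if broadcast else model_bytes * cores


if __name__ == "__main__":
    for name in CORE_PEAK_TOPS:
        print(name, [cluster_peak_tops(name, n) for n in CLUSTER_SIZES])
    model_bytes = 50_000_000  # hypothetical 50 MB of shared weights
    print(weight_fetch_bytes(model_bytes, 4, broadcast=False))
    print(weight_fetch_bytes(model_bytes, 4, broadcast=True))
```

The QC Ultra row reproduces the 864 TOPS top end (8 × 108), and on a four-core cluster the broadcast case moves 50 MB of weights instead of 200 MB under this simplified model.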

ADAS Compute Chiplets for Less than $10

“The remarkable compute density of the QC Series GPNPU cores is a significant breakthrough for the automotive market,” said Quadric co-founder and CEO Veerbhan Kheterpal. “A component supplier in the automotive market building a 3nm chiplet could deliver over 400 TOPs of fully C++ programmable ML + DSP compute for Software Defined Vehicle platforms for a die cost of well under $10,” continued Kheterpal. “Compare that price-performance to existing solutions that repurpose $10,000 datacenter GPGPUs or performance-limited mobile phone chipsets redirected into the automotive market.”

Greater Configurability to Match Compute to Workload

The QC series processors include a range of configuration options that let the SoC developer match GPNPU capability to the target application. The Chimera architecture blends high-performance multiply-accumulate (MAC) units with fully C++ programmable 32-bit fixed-point ALUs in each Processing Element (PE). The PE array scales from 64 to 1024 PEs to build the Nano, Perform and Ultra cores. Each configured GPNPU core can have 8, 16 or 32 INT8 MACs per PE. Designers targeting systems with large, weight-bound workloads such as Large Language Models (LLMs) would typically choose the 8-MAC configuration with wide AXI interfaces, because those workloads are limited by how fast weights can be streamed in rather than by MAC count. Designers building systems with more MAC-intensive workloads, such as high-resolution image processing, would choose the higher-ratio option of 32 MACs per PE. A 16-bit floating-point multiply-accumulate unit, running at half the throughput of the INT8 MACs, is also a configurable option for each processor.
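Peak throughput follows from the array size, and a simple roofline model shows why weight-bound workloads favor fewer MACs per PE plus wider interfaces. This sketch assumes each MAC counts as two operations, a common industry convention that the release does not state, and assumes the QC Nano sits at the 64-PE end of the range. Under those assumptions, 64 PEs × 32 MACs at the 1.7 GHz quoted for 3nm gives about 6.96 TOPS, in line with the QC Nano's "up to 7 TOPS". The 50 GB/s bandwidth and the ops-per-byte intensities are hypothetical values chosen for illustration.

```python
"""Peak-throughput and roofline arithmetic for a GPNPU core (illustrative)."""

# Assumption: each MAC counts as two operations (multiply + add), a common
# convention for quoting TOPS; the release does not state its convention.
OPS_PER_MAC = 2


def peak_tops(num_pes: int, macs_per_pe: int, clock_ghz: float) -> float:
    """Peak INT8 TOPS = PEs x MACs per PE x ops per MAC x clock."""
    ops_per_cycle = num_pes * macs_per_pe * OPS_PER_MAC
    return ops_per_cycle * clock_ghz * 1e9 / 1e12


def attainable_tops(peak: float, bandwidth_gbs: float, ops_per_byte: float) -> float:
    """Roofline bound: the lower of the compute peak and the memory roof.

    The memory roof is bytes delivered per second times operations per byte.
    """
    memory_roof_tops = bandwidth_gbs * 1e9 * ops_per_byte / 1e12
    return min(peak, memory_roof_tops)


if __name__ == "__main__":
    clock_ghz = 1.7       # top clock quoted for 3nm in the release
    bandwidth_gbs = 50.0  # hypothetical external-memory bandwidth
    for macs in (8, 32):
        peak = peak_tops(64, macs, clock_ghz)  # assumed 64-PE (Nano-class) array
        weight_bound = attainable_tops(peak, bandwidth_gbs, ops_per_byte=2)
        compute_bound = attainable_tops(peak, bandwidth_gbs, ops_per_byte=500)
        print(f"{macs} MACs/PE: peak {peak:.2f}, weight-bound {weight_bound:.2f}, "
              f"compute-bound {compute_bound:.2f} TOPS")
```

At 2 ops/byte, roughly what batch-1 LLM decoding with INT8 weights sees, both configurations are capped at 0.1 TOPS by memory bandwidth, so the extra MACs in the 32-MAC option sit idle. At 500 ops/byte both are compute-bound, and the 32-MAC option (6.96 TOPS) is four times faster than the 8-MAC option (1.74 TOPS).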

The cycle-accurate Chimera Instruction Set Simulator that accompanies the Quadric Chimera GPNPU lets design teams fully simulate target workloads and make informed choices about MAC ratios, AXI widths, tightly coupled Level 2 RAM size and other user-selectable hardware options. Compared with the previous-generation Chimera QB offering, the new configuration options for Chimera QC cores can deliver up to 2.7x higher compute density, measured in TOPS/mm².

Optimizations for Generative AI

Many design teams today are wrestling with how best to implement the most energy-efficient machine learning compute engine to run today's and tomorrow's generative AI models. LLMs in particular have massive sets of coefficients (weights) that must be streamed into the compute engine for each token generated, which makes those models I/O-bound in many cases. Quadric's Chimera QC series adds an option to use 4-bit weights produced by the most advanced training tools, halving weight bandwidth requirements compared with standard 8-bit integer weights. Coupled with extra-wide AXI interconnect interfaces of up to 1024 bits per cycle, the new QC Series cores directly address the needs of companies seeking to deploy low-power, high-performance LLMs in volume consumer devices.
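Because each generated token requires streaming the model weights, memory bandwidth sets a ceiling on decode speed, and halving the bits per weight doubles that ceiling. The sketch below computes this bound under deliberately simple assumptions: batch size 1, every weight read from external memory once per token, no on-chip reuse, and activation and KV-cache traffic ignored. The 7-billion-parameter model and the 1.7 GHz interface clock are hypothetical; the release does not state the AXI clock.

```python
"""Bandwidth-bound ceiling on LLM decode rate (illustrative sketch)."""


def weight_bytes_per_token(num_params: float, bits_per_weight: int) -> float:
    """Bytes of weights streamed per generated token, assuming each is read once."""
    return num_params * bits_per_weight / 8


def bus_bandwidth_gbs(bus_bits: int, clock_ghz: float) -> float:
    """Peak bandwidth in GB/s (1 GB = 1e9 bytes) for a bus moving bus_bits per cycle."""
    return bus_bits / 8 * clock_ghz


def max_tokens_per_s(num_params: float, bits_per_weight: int, bandwidth_gbs: float) -> float:
    """Upper bound on tokens per second when weight streaming is the bottleneck."""
    return bandwidth_gbs * 1e9 / weight_bytes_per_token(num_params, bits_per_weight)


if __name__ == "__main__":
    bw = bus_bandwidth_gbs(1024, 1.7)  # 1024-bit AXI at an assumed 1.7 GHz
    params = 7e9                       # hypothetical 7B-parameter model
    for bits in (8, 4):
        rate = max_tokens_per_s(params, bits, bw)
        print(f"INT{bits}: {rate:.1f} tokens/s ceiling at {bw:.1f} GB/s")
```

With these assumptions the bus delivers about 217.6 GB/s, giving a ceiling of roughly 31 tokens/s with INT8 weights and roughly 62 tokens/s with INT4 weights. Real throughput would be lower once activations, KV-cache traffic and bus efficiency are accounted for.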

Safety Enhanced Versions for Automotive Applications

The QC processor series and the multicore QC-M processor family are both offered in Safety Enhanced (SE) versions that add hardware enhancements for greater error resiliency. Each SE core comes with failure modes and effects analysis (FMEA) reports and collaborative Development Interface Agreement (DIA) report generation, all backed by the Chimera Software Development Kit toolchain, which is undergoing ISO 26262 tool confidence level certification.

Scalable Performance: 1 TOPS to 864 TOPS

The QC series of the Chimera family of GPNPUs includes three individual cores (QC Nano, QC Perform and QC Ultra) and QC-M multicore clusters of two, four or eight cores.

Chimera cores can be targeted to any silicon foundry and any process technology. The entire family of QC Series GPNPUs can achieve up to 1.7 GHz operation in 3nm processes using conventional standard-cell flows and commonly available single-ported SRAM.

Proven in Silicon, Ready for Evaluation

The Chimera processor architecture has already been proven at speed in silicon. Quadric is ready for immediate engagement with chip design teams looking to start an IP evaluation. For more information on the Chimera architecture and the QC Series of GPNPUs, visit the Quadric.io website.

About Quadric

Quadric Inc. is the leading licensor of fully programmable general-purpose neural processor IP (GPNPU) that runs both machine learning inference workloads and classic DSP and control algorithms. Quadric's unified hardware and software architecture is optimized for on-device ML inference, providing up to 864 TOPS and automotive-grade safety-enhanced versions.