If you are comparing NPU alternatives, pay special attention to how the NPU/GPNPU will be used in your target system, and make sure each NPU/GPNPU vendor reports its benchmarks in a way that matches your system needs.

When evaluating benchmark results for AI/ML processing solutions, it is very helpful to remember Shakespeare’s Hamlet, and the famous line: “To be, or not to be.” Except in this case the “B” stands for Batched.

Batch-1 Mode Reflects Real-World Cases

There are two different ways in which a machine learning inference workload can be used in a system. In the first, a particular ML graph is run one time, preceded by other computation and followed by still other computation in a complete signal chain. Often these signal chains combine multiple different AI workloads in sequence, stitched together by conventional code that prepares or conditions the incoming data stream and by decision-making control code that runs in between the ML graphs.

An application might first run an object detector on a high-resolution image to find pertinent objects (people, cars, road obstacles) and then subsequently call on a face identification model (to ID particular people) or a license plate reader to identify a specific car. No single ML model runs continuously because the compute resource is shared across a complex signal chain. An ML workload used in this fashion is referred to as being used in Batch=1, or Batch-1 mode. Batch-1 mode is quite often reflective of real-world use cases.
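
The signal chain described above can be sketched in a few lines of Python. This is only an illustration: `detect_objects`, `identify_face`, and `read_license_plate` are hypothetical stand-ins that return canned values, not real models or any vendor's API. The point is the shape of the control flow, in which each ML graph is invoked once per decision and ordinary code decides what runs next.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str   # e.g. "person" or "car"
    box: tuple   # (x, y, width, height) in pixels


def detect_objects(frame):
    """Hypothetical object-detector graph; returns canned detections."""
    return [
        Detection("person", (10, 20, 64, 128)),
        Detection("car", (200, 80, 160, 90)),
    ]


def identify_face(frame, box):
    """Hypothetical face-identification graph (placeholder result)."""
    return "face-id-placeholder"


def read_license_plate(frame, box):
    """Hypothetical license-plate-reader graph (placeholder result)."""
    return "plate-placeholder"


def process_frame(frame):
    """Run one pass of the signal chain on a single frame."""
    results = []
    # ML graph 1: a single detector invocation on this frame.
    for det in detect_objects(frame):
        # Conventional control code picks the next ML graph per object.
        if det.label == "person":
            results.append(("person", identify_face(frame, det.box)))
        elif det.label == "car":
            results.append(("car", read_license_plate(frame, det.box)))
    return results


# The stubs ignore the frame contents, so None is enough here.
chain_output = process_frame(frame=None)
print(chain_output)
```

Because the follow-on model changes from object to object, no single graph gets to run back to back, which is why each call behaves like a Batch-1 invocation.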

The Batched Mode Alternative

The alternative is Batched mode, wherein the same network is run repeatedly (endlessly) without interruption. When used in Batched mode, many neural processing units (NPUs) can take advantage of selected optimizations, such as reuse of model weights or pipelining of intermediate results, to achieve much higher throughput than the same machine running the same model in Batch-1 mode. Batched mode can be seen in real-world deployments when a device has a known, fixed workload and all the pre- and post-processing can be shifted to a different compute resource, such as a CPU/DSP or a second ML NPU.
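
To see why weight reuse alone can inflate a throughput figure, consider a deliberately simplified model, written here as a Python sketch. It assumes the weights are fetched once per batch and that each inference then costs a fixed compute time. The function name and the millisecond values are made up for illustration and are not measurements of any real NPU.

```python
def inferences_per_second(batch_size, weight_load_ms, compute_ms):
    """Toy throughput model: weights load once per batch, compute is per inference."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batch_time_ms = weight_load_ms + batch_size * compute_ms
    return 1000.0 * batch_size / batch_time_ms


# Hypothetical costs, chosen only to make the effect visible.
WEIGHT_LOAD_MS = 4.0   # assumed time to fetch model weights
COMPUTE_MS = 1.0       # assumed compute time for one inference

for n in (1, 8, 64):
    ips = inferences_per_second(n, WEIGHT_LOAD_MS, COMPUTE_MS)
    print(f"Batch={n}: {ips:.1f} inferences/sec")
```

Under these assumed numbers the Batch-1 figure is 200 inferences per second, while larger batches approach the 1,000 per second ceiling set by compute time alone. A headline number quoted at a large batch size therefore says little about a workload that has to reload weights between different models.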

Check The Fine Print

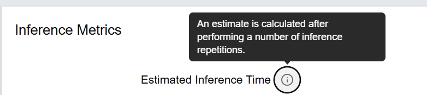

Many NPU vendors will only report their Batched results, because there can be substantial inferences-per-second differences between Batch-1 and Batch-N calculations. The example screenshot from a popular silicon vendor’s website shows how this Batching “trick” can be presented. Only when the user clicks the Info popup does it become clear that the reported inference time is “estimated” and assumes Batched mode over an unspecified number of repetitions.

If you have to do a lot of digging and calculation to discover the unbatched performance number, perhaps the NPU IP vendor is trying to distract you from the shortcomings of that particular IP?

How to Get Gimmick-Free Data

If you want unfiltered, full disclosure of performance data for a leading machine learning processing solution, head over to Quadric’s online DevStudio tool. In DevStudio you will find more than 120 AI benchmark models, each with links to the source model and with all the intermediary compilation results and reports ready for your inspection. Each benchmark model also comes with data from hundreds of cycle-accurate simulation runs, so you can compare all the possible permutations of on-chip memory size options and assumptions about off-chip DDR bandwidth, all presented with a fully transparent Batch=1 set of test conditions. You can also easily download the full SDK to run your own simulation of a complete signal chain, or a batched simulation to match your system needs.