There are a couple of dozen NPU options on the market today. Each with competing and conflicting claims about efficiency, programmability and flexibility. One of the starkest differences among the choices is the seemingly simple question of what is the “best” choice of placement of memory relative to compute in the NPU system hierarchy.

Some NPU architecture styles rely heavily on direct or exclusive access to system DRAM, relying on the relative cost-per-bit advantages of high-volume commodity DRAM relative to other memory choices, but subject to the partitioning problem across multiple die. Other NPU choices rely heavily or exclusively on on-chip SRAM for speed and simplicity, but at high cost of silicon area and lack of flexibility. Still others employ novel new memory types (MRAM) or novel analog circuitry structures, both of which lack proven, widely-used manufacturing track records. In spite of the broad array of NPU choices, they generally align to one of three styles of memory locality. Three styles that bear (pun intended) a striking resemblance to a children’s tale of three bears!

The children’s fairy tale of Goldilocks and the Three Bears describes the adventures of Goldi as she tries to choose among three choices for bedding, chairs, and bowls of porridge. One meal is “too hot”, the other “too cold”, and finally one is “just right”. If Goldi were faced with making architecture choices for AI processing in modern edge/device SoCs, she would also face three choices regarding placement of the compute capability relative to the local memory used for storage of activations and weights.

In, At or Near?

The terms compute-in-memory (CIM) and compute-near-memory (CNM) originated in discussions of architectures in datacenter system design. There is an extensive body of literature discussing the merits of various architectures, including this recent paper from a team at TU Dresen published earlier this year. All of the analysis boils down to trying to minimize the power consumed and the latency incurred while shuffling working data sets between the processing elements and storage elements of a datacenter.

In the specialized world of AI inference-optimized systems-on-chip (SoCs) for edge devices the same principles apply, but with 3 levels of nearness to consider: in-memory, at-memory and near-memory compute. Let’s quickly examine each.

In Memory Compute: A Mirage

In-memory compute refers to the many varied attempts over more than a decade to meld computation into the memory bit cells or memory macros used in SoC design. Almost all of these employ some flavor of analog computation inside the bit cells of DRAM or SRAM (or more exotic memories such as MRAM) under consideration. In theory these approaches speed computation and lower power by performing compute – specifically multiplication – in the analog domain and in widely parallel fashion. While a seemingly compelling idea, all have failed to date.

The reasons for failure are multiple. Start with the fact that widely used on-chip SRAM has been perfected/optimized for nearly 40 years, as has DRAM for off-chip storage. Messing around with highly optimized approaches leads to area and power inefficiencies compared to the unadulterated starting point. Injecting such a new approach into the tried-and-true standard cell design methodologies used by SoC companies has proven to be a non-starter. The other main shortcoming of in-memory compute is that these analog approaches only perform a very limited subset of the compute needed in AI inference – namely the matrix multiplication at the heart of the convolution operation. But no in-memory compute can build in enough flexibility to cover every possible convolution variation (size, stride, dilation) and every possible MatMul configuration. Nor can in-memory analog compute implement the other 2300 Operations found in the universe of Pytorch models. Thus in-memory compute solutions also need to have full-fledged NPU computation capability in addition to the baggage of the analog enhancements to the memory – “enhancements” that are an area and power burden when using that memory in a conventional way for all the computation occurring on the companion digital NPU.

In the end analysis, in-memory solutions for edge device SoC are “too limited” to be useful for the intrepid chip designer Goldi.

Near Memory Compute: Near is still really very far away

At the other end of the spectrum of design approaches for SoC inference is the concept of minimizing on-chip SRAM memory use and maximizing the utilization of mass-produced, low-cost bulk memories, principally DDR chips. This concept focuses on the cost advantages of the massive scale of DRAM production and assumes that with minimal on-SoC SRAM and sufficient bandwidth to low-cost DRAM the AI inference subsystem can lower SoC cost but maintain high performance by leaning on the fast connection to external memory, often a dedicated DDR interface solely for the AI engine to manage.

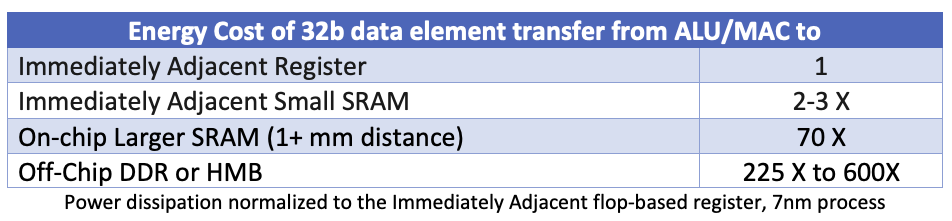

While successful at first glance at reducing the SoC chip area devoted to AI and thus slightly reducing system cost, near-memory approaches have two main flaws that cripple system performance. First, the power dissipation of such systems is out of whack. Consider the table below showing the relative energy cost of moving a 32bit word of data to or from the multiply accumulation logic at the heart of every AI NPU:

Each transfer of data to or from the SoC to DDR consumes between 225 to 600 times the energy (power) of a transfer that stays locally adjacent to the MAC unit. Even an on-chip SRAM a considerable “distance” away from a MAC unit is still 3 to 8 times more energy efficient than going off-chip. With most such SoCs power-dissipation constrained in consumer-level devices, the power limitations of relying principally on external memory renders the near-memory design point impractical. Furthermore, the latency of always relying on external memory means that as newer, more complicated models evolve that might have more irregular data access patterns than old-school Resnet stalwarts, near-memory solutions will suffer significant performance degradation due to latencies.

The double whammy of too much power and low performance means that the near-memory approach is “too hot” for our chip architect Goldi.

At-Memory: Is Just Right

Just as the children’s Goldilocks fable always presented a “just right” alternative, the At-Memory compute architecture is the solution that is Just Right for edge & device SoCs. Referring again to the table above of data transfer energy costs the obvious best choice for memory location is the Immediately Adjacent on-chip SRAM. A computed intermediate activation value saved into a local SRAM burns up to 200X less power than pushing that value off-chip. But that doesn’t mean that you want to only have on-chip SRAM. To do so creates hard upper limits on model sizes (weight sizes) that can fit in each implementation.

The best alternative for SoC designers is a solution that both take advantage of small local SRAMs – preferably distributed in large number among an array of compute elements – as well as intelligently schedules data movement between those SRAMs and the off-chip storage of DDR memory in a way that minimizes system power consumption and minimizes data access latency.

The Just Right Solution

Luckily for Goldilocks and all other SoC architects in the world, the is such a solution: Quadric’s fully programmable Chimera GPNPU – general purpose NPU – a C++ programmable processor combining the power-performance characteristics of a systolic array “accelerator” with the flexibility of a C++ programmable DSP. The Chimera architecture employs a blend of small distributed local SRAMs along with intelligent, compiler-scheduled use of layered SRAM and DRAM to deliver a configurable hardware-software solution “just right” for your next Soc. Learn more at: www.quadric.io.