A big reason purely electric cars reached only 6% of new vehicle sales in Q3 2022 is the fear of running low on battery power, compounded by the lack of readily available fast-charging infrastructure. This “Range Anxiety” has a parallel in the semiconductor market, which has an anxiety problem of its own.

Machine learning (ML) processing power is being built into new chip designs for nearly every end market. To build in ML capabilities, designers are using mixtures of programmable cores (CPUs, GPUs and DSPs) along with dedicated ML inference accelerators (NPUs) to run today’s latest ML inference models.

However, ML algorithms are rapidly changing as data scientists discover new, more efficient and powerful techniques. The ML benchmarks used today to evaluate new building blocks for SoC designs did not exist three or four years ago. The silicon being designed today to 2023 standards will be deployed in 2025 and 2026, by which time ML algorithms will have changed and improved. Changes to ML models include the creation of fundamentally new ML operators and different topologies that rearrange known operators into ever deeper and more complex networks. The creation of new ML operators is a huge concern for SoC designers. What if today’s ML accelerator can’t support these new ML operators? The term “Operator Anxiety” describes the concern that the accelerator selected now might not support future operators. This anxiety is as powerful for chip designers as range anxiety is for electric car purchasers.

Today’s SoC architects typically pair a fully programmable DSP, GPU or CPU with an NPU accelerator for machine learning. NPU accelerators are typically hardwired for maximum efficiency on the most common ML operators, including activations, convolutions, and pooling. Most NPU accelerators cannot take on new functions once implemented in silicon. A few offer limited flexibility by letting the IP vendor hand-write micro-coded command streams, but the actual software developer for the chip doesn’t have this flexibility.

With such NPU accelerators, any new operator must instead run on the CPU, DSP or GPU in what is called Fallback Mode. The challenge is that the CPU, DSP or GPU may be an order of magnitude slower than the NPU, particularly at running these types of operators. This is the source of the “Operator Anxiety.” Overall system performance usually suffers when a function must move out of the accelerator to run on a much slower processor.

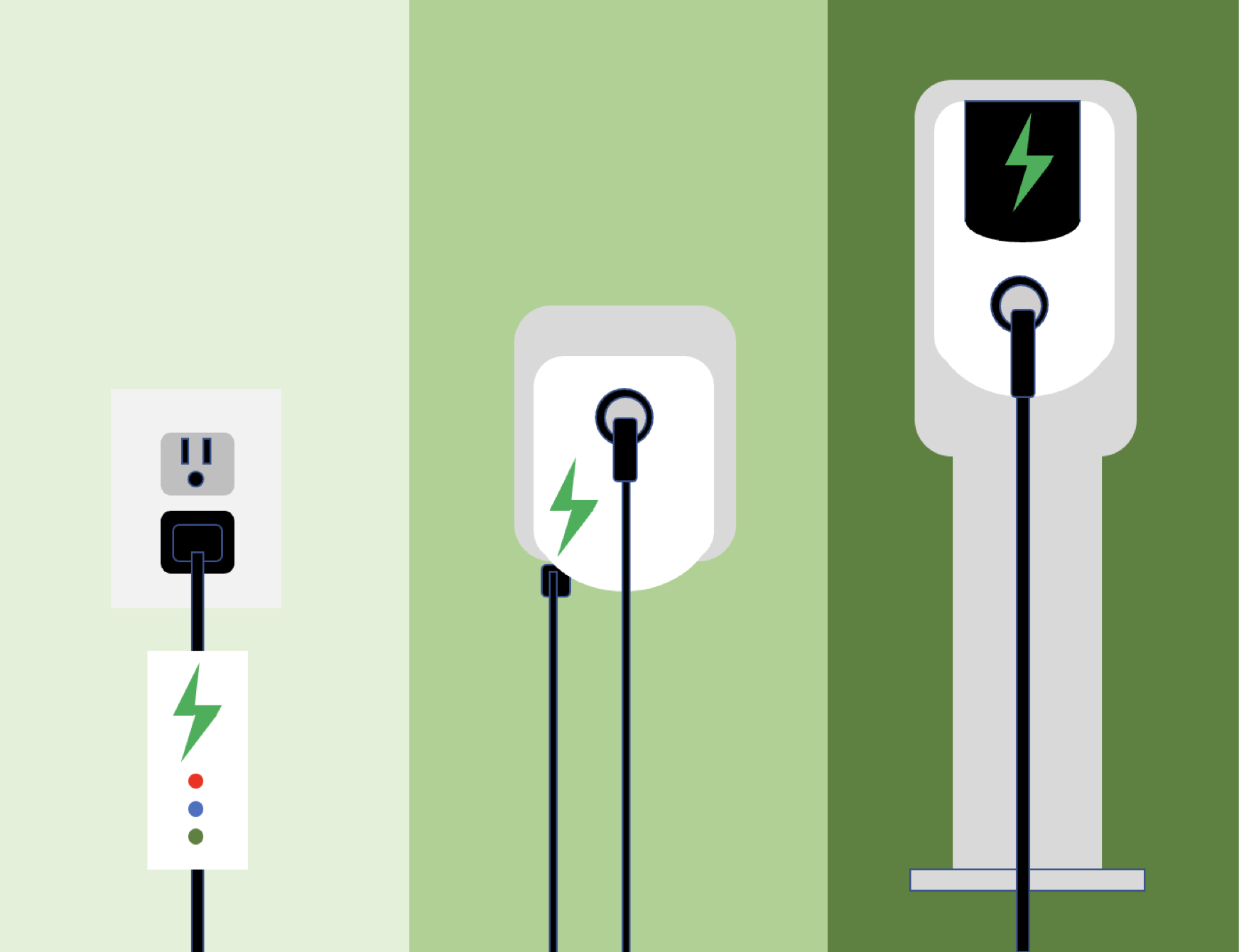

Just as electric car owners suffer significant delays when they must charge their cars with an extension cord plugged into a 110V wall socket, chip performance suffers when a new ML operator must be diverted to a slow DSP or CPU.
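To get a feel for how quickly the fallback penalty adds up, here is a rough back-of-the-envelope sketch. It is not a model of any particular chip: the function name, its parameters, and the example numbers are all illustrative assumptions, and it ignores the cost of moving data between the accelerator and the fallback core, which would only make things worse.

```python
def fallback_latency(all_npu_ms, fallback_fraction, slowdown):
    """Estimate inference latency when some operators leave the NPU.

    all_npu_ms        -- latency if every operator ran on the NPU (assumed)
    fallback_fraction -- share of that time spent in unsupported operators
    slowdown          -- how many times slower the fallback core runs them
    """
    if not 0.0 <= fallback_fraction <= 1.0:
        raise ValueError("fallback_fraction must be between 0 and 1")
    if slowdown < 1.0:
        raise ValueError("slowdown must be at least 1")

    # Work that stays on the accelerator runs at full speed.
    on_npu_ms = all_npu_ms * (1.0 - fallback_fraction)
    # Work diverted to the CPU, DSP or GPU is stretched by the slowdown.
    on_fallback_ms = all_npu_ms * fallback_fraction * slowdown
    return on_npu_ms + on_fallback_ms


if __name__ == "__main__":
    # Hypothetical numbers: a 10 ms model where 5% of the work falls back
    # to a core that is 10x slower at those operators.
    latency = fallback_latency(10.0, 0.05, 10.0)
    print(f"Estimated latency with fallback: {latency:.1f} ms")
```

With these assumed numbers, diverting just 5% of the work to a core that is ten times slower stretches a 10 ms inference to roughly 14.5 ms, a slowdown of about 45%.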

Electric vehicle Range Anxiety can be solved by deploying a widespread network of fast chargers. Operator Anxiety can be solved by using a processor that can run any operator with the performance efficiency of a dedicated NPU.

Curing Operator Anxiety

Yes, there is a processor that can run any new operator with the performance efficiency of a dedicated NPU. The Chimera™ GPNPU from Quadric – available in 1 TOPS, 4 TOPS, and 16 TOPS variants – is that sought-after, anxiety-relieving solution. The Chimera GPNPU can deliver the matrix-optimized performance of an NPU built for ML while also being fully C++ programmable by the software developer, long after the chip is produced.

New ML operators can be written in C++ and will run just as fast as the native operators that come with the Chimera GPNPU. The Chimera core eliminates Operator Anxiety because there is no need to fall back to the CPU or DSP, even if there are significant improvements in operators or graphs in the future.